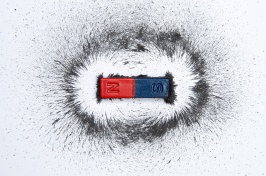

Adobe Stock photo.

Earlier this week, a major Amazon Web Services outage caused significant disruption to apps and websites around the world. UNH's Aleksey Charapko, an assistant professor of computer science whose work focuses on designing systems to be less vulnerable to such disruptions, weighed in on the recent situation. This summer, Charapko received a prestigious Faculty Early Career Development, or CAREER, award from the National Science Foundation for his work in this area.

The recent AWS outage made headlines — what does it reveal about the vulnerabilities of cloud-based systems?

This AWS outage is not the first large outage at a cloud computing provider. We have several major players in that space: Amazon, Microsoft, Google, and a few smaller ones like Oracle. A large outage at one of the big three will be visible, with many applications and services used in everyday life impacted. This happens because most apps and services, ranging from financial systems to e-commerce to home automation to streaming platforms, depend on the cloud.

This dependence means that increasing the reliability of the cloud infrastructure is paramount for these apps and systems. Cloud infrastructure, however, is incredibly complicated and interconnected, making it possible for failures in one part of the cloud to "cascade down" or spread to other cloud components and sub-systems. This cascading effect is exactly what happened on Oct. 20 with Amazon Web Services.

Why is reliability research such a critical and growing field, and how is your research at UNH contributing to it?

It is also important to understand that the cloud, like any complex machine, will sometimes break. However, we cannot just stop this machine to fix it because we rely on it for hosting critical apps and services. Therefore, we must find a new way to improve reliability and minimize the impact on services when failures do occur.

Our group at UNH, in collaboration with other institutions and industry, identified a key set of characteristics that are common to many large failures and outages. Our findings indicate that many cloud systems and mechanisms tend to resist recovery after failure. Moreover, these cloud systems usually require a disproportionate amount of effort to recover, compared to the size of the event that triggered the failure. We can compare this to a cereal box standing upright: a simple jolt may make the box fall on its side, yet much more effort is required to put it back up. We call these failures "metastable failures" and have shown that they were behind several major outages. I would not be surprised if the recent AWS outage was also, at least partly, caused by a metastable failure.
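To make the cereal-box picture concrete, here is a minimal toy sketch in Python. It is not the model used in the research described above; the function name, the numbers, and the key assumption that a service's useful throughput drops once it is pushed past capacity are all illustrative choices made for this example. Under those assumptions, a short traffic spike tips the service into a state where retries keep it overloaded long after the spike ends, and only a deliberate, much larger cut in admitted traffic lets it recover.

```python
def simulate(ticks, arrivals=80, capacity=100, degraded=60,
             spike=range(5, 8), spike_extra=50, shed_from=None, shed_to=20):
    """Return the retry backlog after each tick of a toy overloaded service.

    All parameters are hypothetical. `degraded` is the assumed useful
    throughput once offered load exceeds `capacity`.
    """
    backlog = 0
    history = []
    for t in range(ticks):
        new = arrivals + (spike_extra if t in spike else 0)
        # Optional recovery step: admit far less traffic until the backlog is gone.
        if shed_from is not None and t >= shed_from and backlog > 0:
            new = shed_to
        offered = new + backlog
        # Within capacity, everything is served. Past capacity, useful
        # throughput drops (an assumption), and unserved requests are retried.
        served = offered if offered <= capacity else degraded
        backlog = offered - served
        history.append(backlog)
    return history


if __name__ == "__main__":
    stuck = simulate(30)
    recovered = simulate(30, shed_from=12)
    print("no intervention:", stuck[4], stuck[8], stuck[-1])
    print("with shedding:  ", recovered[11], recovered[-1])
```

In this sketch the normal load of 80 requests per tick fits comfortably within the capacity of 100, yet after a three-tick spike the backlog keeps growing even though traffic has returned to normal. Recovery only happens in the second run, where admitted traffic is cut to 20 per tick until the backlog drains.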

What lessons do experts in reliability and mitigation take away from events like this?

Dealing with failures at the scale of AWS is very hard. If anything, these events are humbling for us, showing that we still have a long way to go in understanding the mechanisms involved in outage progression, developing effective mitigation strategies, and communicating with users about the problem to avoid inadvertently making recovery more difficult.

How did your work at UNH contribute to developing new mitigation strategies at AWS?

Our work on metastable failures has resulted in substantial interest in this topic at AWS. As far as I am aware, AWS has a dedicated team of researchers and engineers working on it. Over the past few years, they have published several papers specifically discussing the mitigation steps they take to prevent potentially significant metastable failures. All of this work builds on the foundation that we have established here in my lab.

What types of services are most affected when major outages occur?

From the consumer side, I am not sure we can pinpoint any specific kinds of services or applications that may be more affected. However, I would note that services handling large volumes of data may be more at risk, as data-storage cloud infrastructure is more susceptible to failures and typically more difficult to recover. This is because transferring large amounts of data from one location to another to recover from a failure is both resource- and time-consuming, and cloud resources may already be scarce during an outage.

Is there anything everyday users — like online shoppers or streamers — can do to protect themselves?

As consumers of these services, there is not much we can do to help prevent these problems. There are some small things we can do to help with recoveries, though. A recovery from a major outage can take a very long time, partly because cloud systems and services cannot just be turned on like a light switch. If we do that, all the pent-up demand will overwhelm them and cause a secondary failure. As everyday users, we should be patient and avoid repeatedly checking failed apps until the cloud or app vendors notify the public that the problem has been resolved. This will avoid putting extra load on the infrastructure that these engineers are trying to fix.
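The same idea of not rushing back all at once is commonly built into software clients as exponential backoff with random jitter. The Python sketch below is only an illustration of that general technique, not something described in the interview; the function name and default values are hypothetical. Each retry waits a random time up to a ceiling that doubles with every attempt, so many clients recovering together spread their requests out instead of arriving in one wave.

```python
import random


def backoff_delays(attempts, base=1.0, cap=60.0, rng=None):
    """Return one randomized wait time (in seconds) per retry attempt.

    `base` and `cap` are illustrative defaults, not recommended values.
    """
    if rng is None:
        rng = random.Random()
    delays = []
    for attempt in range(attempts):
        # The ceiling doubles each attempt but never exceeds `cap`.
        ceiling = min(cap, base * 2 ** attempt)
        # "Full jitter": pick anywhere between zero and the ceiling.
        delays.append(rng.uniform(0, ceiling))
    return delays


if __name__ == "__main__":
    for i, delay in enumerate(backoff_delays(6)):
        print(f"retry {i + 1}: wait about {delay:.1f}s")
```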


Compiled By:

Brooks Payette | College of Engineering and Physical Sciences